Multi-Teacher Knowledge Distillation: How to Merge the Wisdom of Many Models into One

How to Combine the Strengths of Multiple Teacher Models into One Student Model: A Deep Dive into State-of-the-Art Techniques and Applications

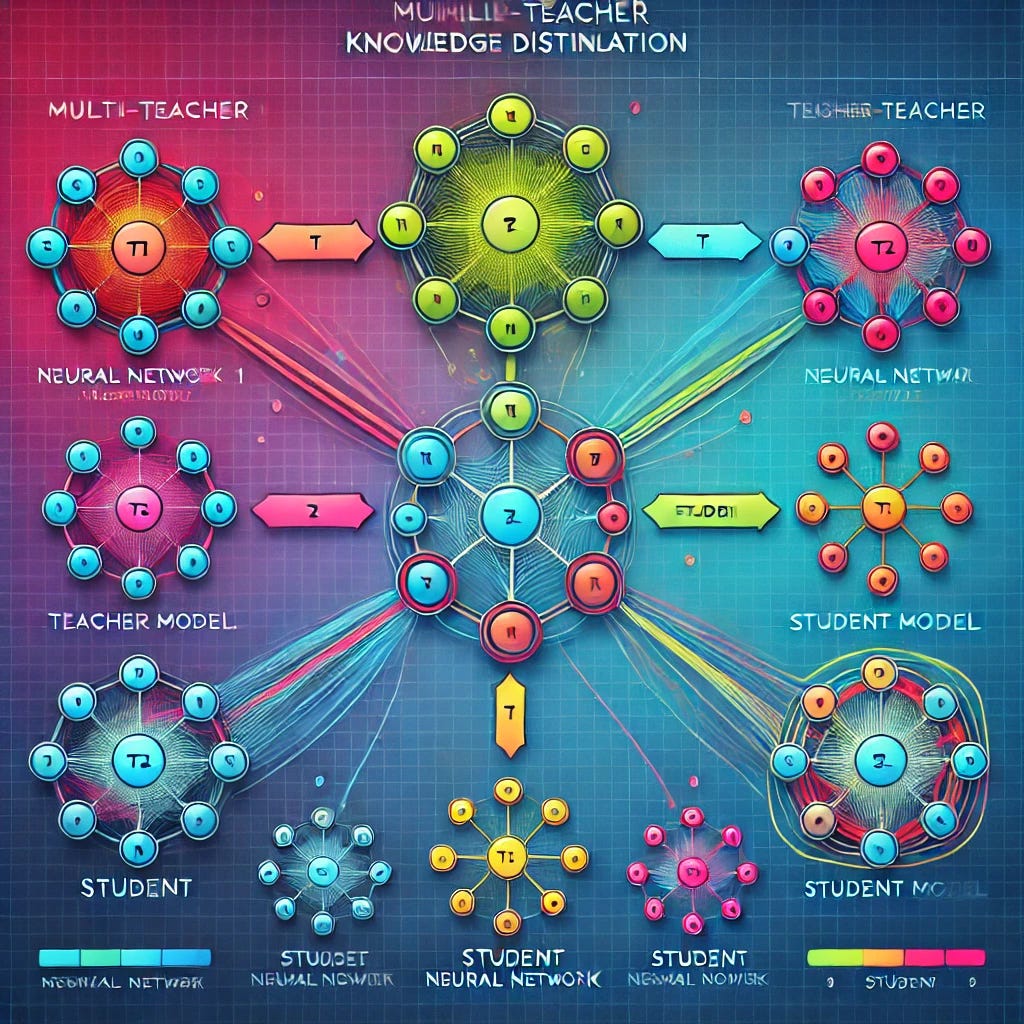

Visual representation of multi-teacher knowledge distillation: combining multiple expert models into one efficient student

In machine learning, model ensembles are known for their robust performance. By combining the predictions of multiple models, ensembles often outperform single models, providing better generalization and more reliable uncertainty estimates. The downside is cost: every member of the ensemble must be stored and run at inference time, so memory use and latency grow with the number of models. To overcome this, researchers have developed a technique known as multi-teacher knowledge distillation, where the knowledge of multiple teacher models is distilled into a single student model. The goal is to keep most of the ensemble's accuracy while paying the inference cost of only one model.

🎯 Introduction

In this blog post, we will delve into the world of multi-teacher knowledge distillation. We will explore its fundamental concepts, discuss the latest research advancements, and highlight key methodologies, including Unified Ensemble Knowledge Distillation, Adaptive Temperature-Guided Multi-Teacher Distillation, and Meta-Learning Approaches. Let’s dive in!

🤔 Why Multi-Teacher Knowledge Distillation?

The primary motivation behind multi-teacher knowledge distillation is to combine the strengths of multiple models while addressing the limitations of using an ensemble at inference time. Here’s why this approach is gaining traction:

Key Benefits:

Enhanced Generalization: By learning from multiple teachers, the student model captures a broader range of features, reducing the risk of overfitting and improving generalization on unseen data.

Efficient Inference: A single student model is much faster and more efficient than an ensemble of multiple models, making it suitable for real-time applications.

Knowledge Aggregation: Multi-teacher distillation aggregates diverse knowledge from different models, which can be beneficial when the teachers have been trained on slightly different datasets or tasks.

Multi-teacher knowledge distillation addresses the fundamental trade-off between model performance and computational efficiency by combining ensemble benefits with single-model speed.

🔬 Fundamentals of Multi-Teacher Knowledge Distillation

Knowledge distillation is a process where a student model is trained to mimic the behavior of one or more teacher models. The traditional approach involves a single teacher model, but in multi-teacher knowledge distillation, the student learns from an ensemble of teachers. The key challenge is how to effectively combine the knowledge of multiple teachers and transfer it to the student.

Core Components:

Multi-teacher knowledge distillation typically involves the following components:

Soft Targets: Instead of relying only on the hard labels (ground truth), the student model also learns from the soft predictions of the teacher models. These predictions are probability distributions over the classes, so they carry richer information about the teachers’ confidence and about which classes each teacher considers similar.

Aggregation Strategy: The predictions from multiple teachers need to be aggregated before being used to supervise the student model. This can be done through simple averaging, weighted averaging, or more sophisticated methods that consider the reliability of each teacher.

Loss Function: The student model is trained using a distillation loss, which typically combines two terms: the standard cross-entropy loss against the ground-truth labels, and a Kullback-Leibler (KL) divergence loss that pulls the student’s predictions toward the aggregated soft targets of the teachers.

The soft targets from teacher models contain more information than hard labels, as they encode the confidence and uncertainty of the predictions, which helps the student model learn more nuanced decision boundaries.
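To make the three components concrete, here is a minimal sketch in plain Python. It assumes simple (optionally weighted) averaging as the aggregation strategy; the function names, the mixing coefficient `alpha`, and the default temperature of 2.0 are illustrative choices of ours, not values taken from any specific paper. The `temperature ** 2` factor follows the common convention from the original single-teacher distillation recipe, which keeps gradient magnitudes comparable across temperatures.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate_teachers(teacher_logits, weights=None, temperature=2.0):
    """Weighted average of the teachers' softened distributions (uniform by default)."""
    n = len(teacher_logits)
    if weights is None:
        weights = [1.0 / n] * n
    probs = [softmax(logits, temperature) for logits in teacher_logits]
    num_classes = len(probs[0])
    return [sum(w * p[c] for w, p in zip(weights, probs)) for c in range(num_classes)]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def distillation_loss(student_logits, teacher_logits, label, alpha=0.5, temperature=2.0):
    """alpha * cross-entropy on the hard label + (1 - alpha) * KL to the aggregated soft target."""
    target = aggregate_teachers(teacher_logits, temperature=temperature)
    student_soft = softmax(student_logits, temperature)
    kd = kl_divergence(target, student_soft) * temperature ** 2
    ce = -math.log(softmax(student_logits)[label] + 1e-12)
    return alpha * ce + (1 - alpha) * kd

# Example: two teachers, three classes, true class 0
teachers = [[2.0, 1.0, 0.1], [1.5, 1.2, 0.3]]
print(distillation_loss([1.0, 0.5, 0.2], teachers, label=0))
```

In a real training loop the same computation would be done on batched tensors in an autodiff framework; the per-sample version here is only meant to show how the pieces fit together.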

🚀 Key Techniques in Multi-Teacher Knowledge Distillation

Let’s take a closer look at some of the cutting-edge methods developed for multi-teacher knowledge distillation:

1. Unified Ensemble Knowledge Distillation (UEKD)

Overview

Unified Ensemble Knowledge Distillation (UEKD) is a framework designed to handle both labeled and unlabeled data during distillation. It dynamically adjusts the influence of each teacher model based on its reliability, so that the student learns more from the teachers that are most likely to be right.

Methodology

Dynamic Weighting: UEKD assigns a weight to each teacher model’s predictions based on that teacher’s accuracy on a labeled validation set. Teachers that perform well receive higher weights, while weaker teachers have less influence.

Disagreement-Based Learning: For unlabeled data, UEKD focuses on samples where the teachers disagree the most. These are often challenging examples where the student can learn valuable insights by resolving the conflicting predictions.

Dual Loss Function: The loss function combines supervised learning on labeled data and unsupervised learning on unlabeled data. The student minimizes the cross-entropy loss for labeled data and the KL divergence between its predictions and the aggregated teacher predictions for unlabeled data.
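The three steps above can be sketched as follows. This is an illustration under our own assumptions, not the UEKD authors' implementation: weights are validation accuracies normalized to sum to 1, disagreement is measured as the mean pairwise total-variation distance between teacher distributions, and all function names are hypothetical.

```python
import math

def accuracy_weights(val_accuracies):
    """Normalize validation accuracies into teacher weights that sum to 1."""
    total = sum(val_accuracies)
    return [a / total for a in val_accuracies]

def weighted_target(teacher_probs, weights):
    """Weighted average of the teachers' probability distributions."""
    num_classes = len(teacher_probs[0])
    return [sum(w * p[c] for w, p in zip(weights, teacher_probs)) for c in range(num_classes)]

def disagreement(teacher_probs):
    """Mean pairwise total-variation distance (0 = full agreement, 1 = maximal conflict)."""
    n = len(teacher_probs)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    if not pairs:
        return 0.0
    tv = [0.5 * sum(abs(a - b) for a, b in zip(teacher_probs[i], teacher_probs[j]))
          for i, j in pairs]
    return sum(tv) / len(tv)

def dual_loss(student_probs, teacher_probs, weights, label=None, eps=1e-12):
    """Cross-entropy when a label exists, otherwise KL to the weighted teacher target."""
    if label is not None:
        return -math.log(student_probs[label] + eps)
    target = weighted_target(teacher_probs, weights)
    return sum(t * math.log((t + eps) / (s + eps)) for t, s in zip(target, student_probs))

# Example: the more accurate teacher gets the larger weight
weights = accuracy_weights([0.9, 0.6])
teacher_probs = [[0.8, 0.2], [0.6, 0.4]]
print(weights, disagreement(teacher_probs))
print(dual_loss([0.7, 0.3], teacher_probs, weights))           # unlabeled sample
print(dual_loss([0.7, 0.3], teacher_probs, weights, label=0))  # labeled sample
```

One way to use the disagreement score is to rank the unlabeled pool and sample high-disagreement examples more often; how exactly the scores are turned into a sampling schedule is a design choice we leave open here.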

Applications

Semi-Supervised Learning: UEKD is particularly effective in scenarios where labeled data is scarce. By leveraging both labeled and unlabeled data, it helps the student model achieve high performance with limited supervision.

Image and Text Classification: UEKD has shown strong results in tasks like image recognition and text classification, where combining knowledge from diverse models can lead to better feature learning.

Reference: Read more about UEKD here

2. Adaptive Temperature-Guided Multi-Teacher Distillation (ATMKD)

Overview

ATMKD introduces an adaptive temperature mechanism to control the difficulty level of the knowledge transferred from the teachers. This method uses different temperatures for different teachers, allowing the student to focus on the most informative predictions.

Methodology

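Adaptive Temperature Scaling: Temperature controls how sharp a softmax distribution is: dividing the logits by a higher temperature flattens the distribution, while a lower temperature keeps it peaked. The method adjusts the temperature for each teacher dynamically based on that teacher’s prediction confidence. Teachers with high-confidence predictions are assigned lower temperatures, while those with uncertain predictions are assigned higher temperatures.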

Diverse Aggregation Strategy: Instead of simple averaging, ATMKD uses a diverse aggregation strategy that considers the confidence of each teacher’s predictions. This helps in reducing the impact of noisy or unreliable teachers.

Weighted Distillation Loss: The loss function measures the divergence between the student’s predictions and a weighted average of the teachers’ soft targets, where each teacher’s soft targets are computed with its own adaptively chosen temperature.
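Here is a rough sketch of how per-teacher temperatures and confidence-aware aggregation might fit together. The specifics are our assumptions rather than the published ATMKD formulation: confidence is taken to be the maximum softmax probability at temperature 1, the temperature is a linear map from confidence into a hypothetical range `[t_min, t_max]`, and aggregation weights are proportional to confidence.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def adaptive_temperature(logits, t_min=1.0, t_max=4.0):
    """Lower temperature for confident teachers, higher for uncertain ones."""
    confidence = max(softmax(logits))  # in (0, 1]
    return t_max - (t_max - t_min) * confidence

def confidence_weighted_target(teacher_logits):
    """Soften each teacher with its own temperature, then average weighted by confidence."""
    confidences = [max(softmax(logits)) for logits in teacher_logits]
    total = sum(confidences)
    weights = [c / total for c in confidences]
    softened = [softmax(logits, adaptive_temperature(logits)) for logits in teacher_logits]
    num_classes = len(softened[0])
    return [sum(w * p[c] for w, p in zip(weights, softened)) for c in range(num_classes)]

# Example: one confident teacher and one uncertain teacher
confident = [6.0, 0.0, 0.0]
unsure = [0.4, 0.3, 0.2]
print(adaptive_temperature(confident), adaptive_temperature(unsure))
print(confidence_weighted_target([confident, unsure]))
```

The resulting target can be plugged into a KL-based distillation term in place of a plain average. The linear confidence-to-temperature map is the simplest choice; a learned or scheduled mapping would be an equally valid alternative.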

Applications

Knowledge Transfer Across Domains: ATMKD is useful in scenarios where the teacher models have been trained on different datasets or tasks. The adaptive temperature mechanism helps the student model integrate diverse knowledge sources effectively.

Speech and Language Processing: This method has been applied in tasks like speech recognition and natural language understanding, where the variability in teacher confidence can be high.

Reference: Learn more about ATMKD

3. Meta-Learning for Multi-Teacher Distillation

Overview

Meta-learning approaches have been applied to multi-teacher knowledge distillation to optimize the process of combining teacher knowledge. By using a meta-weight network, the student model learns how to weigh the contributions of each teacher dynamically.

Methodology

Meta-Weight Network: A small neural network, called the meta-weight network, is trained alongside the student model to predict the optimal weights for each teacher’s predictions. The meta-weight network uses features extracted from the input data and the teacher predictions to make its decision.

End-to-End Training: The meta-weight network and the student model are trained jointly in an end-to-end manner, allowing the student to learn the optimal aggregation strategy dynamically during training.

Enhanced Generalization: By learning to weigh the teachers’ predictions adaptively, the student model captures the most relevant information, leading to better generalization on new data.
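As a sketch of the idea, the forward pass of a meta-weight network can be as small as one linear layer followed by a softmax over teachers. Everything here is hypothetical: the `MetaWeightNet` class, its single-layer architecture, and the choice to concatenate input features with the teachers' probabilities are illustrative assumptions. Only the forward pass is shown; in practice the parameters would be updated jointly with the student by an autodiff framework.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class MetaWeightNet:
    """Hypothetical single linear layer that outputs one weight per teacher."""

    def __init__(self, in_dim, num_teachers, seed=0):
        rng = random.Random(seed)
        # Small random weights; a real setup would learn these during training.
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(num_teachers)]
        self.b = [0.0] * num_teachers

    def __call__(self, features):
        scores = [sum(w * x for w, x in zip(row, features)) + b
                  for row, b in zip(self.W, self.b)]
        return softmax(scores)  # weights are positive and sum to 1

def meta_weighted_target(net, input_features, teacher_probs):
    """Build the per-sample soft target from weights predicted by the meta-weight network."""
    features = list(input_features) + [p for probs in teacher_probs for p in probs]
    weights = net(features)
    num_classes = len(teacher_probs[0])
    target = [sum(w * p[c] for w, p in zip(weights, teacher_probs)) for c in range(num_classes)]
    return weights, target

# Example: 2 input features, 2 teachers, 3 classes -> in_dim = 2 + 2 * 3
teacher_probs = [[0.7, 0.2, 0.1], [0.3, 0.4, 0.3]]
net = MetaWeightNet(in_dim=8, num_teachers=2)
weights, target = meta_weighted_target(net, [0.5, -1.0], teacher_probs)
print(weights, target)
```

Because the weights depend on the input, the student can lean on different teachers for different samples, which is what separates this from a fixed weighted average.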

Applications

Cross-Task Knowledge Distillation: This method is effective when the teacher models have been trained on related but different tasks. The meta-weight network helps the student model leverage the diverse knowledge effectively.

Robustness to Noisy Teachers: Meta-learning approaches can mitigate the impact of noisy or low-quality teacher models, making them suitable for real-world applications where teacher quality may vary.

Reference: Explore more about meta-learning approaches

⚠️ Practical Considerations and Challenges

While multi-teacher knowledge distillation offers significant advantages, it also comes with challenges:

Major Challenges:

Computational Complexity: Training with multiple teachers can be computationally intensive, especially if the teachers are large models like BERT or GPT.

Balancing Teacher Influence: It can be challenging to determine the optimal weighting for each teacher, particularly when their predictions conflict.

Transfer of Intermediate Knowledge: Some methods focus only on the final output predictions, but transferring intermediate features can provide richer knowledge transfer.
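For the last point, one common way to transfer intermediate knowledge is a feature-matching ("hint") loss, sketched below. This is a general technique we are bringing in for illustration, not something specific to the methods covered in this post. The sketch assumes one linear projection per teacher to map the student's features into that teacher's feature dimension; the function names and projection matrices are hypothetical.

```python
def project(features, matrix):
    """Apply a (teacher_dim x student_dim) linear projection to the student's features."""
    return [sum(w * x for w, x in zip(row, features)) for row in matrix]

def feature_matching_loss(student_features, teacher_features, projections):
    """Mean squared error between projected student features and each teacher's features,
    averaged over teachers."""
    losses = []
    for target, matrix in zip(teacher_features, projections):
        projected = project(student_features, matrix)
        losses.append(sum((p - t) ** 2 for p, t in zip(projected, target)) / len(target))
    return sum(losses) / len(losses)

# Example: a 2-d student layer matched against a 2-d and a 3-d teacher layer
student = [1.0, 2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
widen = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(feature_matching_loss(student, [[1.0, 2.0], [1.0, 2.0, 2.0]], [identity, widen]))
```

A term like this is typically added to the output-level distillation loss with a small coefficient, and the projection matrices are learned together with the student.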

When implementing multi-teacher distillation, carefully consider the trade-off between the number of teachers and computational overhead. More teachers don’t always lead to better performance.

📊 Performance Comparison

| Method | Strengths | Best Use Cases | Computational Cost |
|---|---|---|---|
| UEKD | Handles labeled/unlabeled data, dynamic weighting | Semi-supervised learning | Medium |
| ATMKD | Adaptive temperature, confidence-aware | Cross-domain transfer | Medium-High |
| Meta-Learning | Dynamic weight optimization, noise robust | Multi-task scenarios | High |

🎯 Conclusion

Multi-teacher knowledge distillation is a powerful technique that combines the strengths of ensemble learning with the efficiency of a single student model. Methods like UEKD, ATMKD, and meta-learning approaches are paving the way for more effective and scalable knowledge transfer, enabling robust performance across a wide range of applications. As research continues to advance, we can expect even more innovative strategies that push the boundaries of what’s possible in distillation.

Future Directions

- Automated Teacher Selection: Developing methods to automatically select the most suitable teachers for a given task

- Hierarchical Knowledge Transfer: Exploring multi-level knowledge distillation from different layers of teacher models

- Continual Learning Integration: Combining multi-teacher distillation with continual learning for lifelong model adaptation

If you’re interested in implementing these techniques, explore the provided references for in-depth insights and code examples. Have you tried multi-teacher distillation in your projects? Share your experiences and thoughts!

This post is part of my ongoing series on AI for precision medicine and advanced machine learning techniques. Subscribe for more insights into cutting-edge AI research and applications.
